04 ePA Shows How Not to Do Health Tech

The ePA is a massive privacy concern for patients receiving psychological treatment. Their data is visible to any doctor who is granted access, even one working in a completely unrelated field. Some experts warn against using the ePA because of this.

This week's main stories:

- Germany starts electronic patient record rollout. The ePA ("elektronische Patientenakte") has been in the works for 20 years. The full rollout is planned to be completed by October. Patients can now grant clinicians access to all their relevant medical information. But data protection controls are too weak. Particularly sensitive mental health data, such as diagnoses and medication, is at risk.

- ChatGPT is the world’s busiest therapist. A study by Sentio University and the Sentio Counseling Center found that 48.7% of AI users who self-report mental health issues seek therapeutic help from LLMs. I distilled the most relevant statistics for founders, clinicians, and policymakers.

- Please diagnose my environment! Environmental toxins have significant impacts on mental health, with various pollutants linked to cognitive impairment and psychological disorders. Air pollutants, heavy metals, pesticides, and noise pollution are associated with anxiety and other conditions. Who is building a company around this?

Odd Lots:

- Meta will flood you with fake friends. AI friends, to be exact. Zuckerberg speaks of fulfilling Americans' "demand" for friendship. Futurism outlines why this could become the next level of social media addiction.

- Psychologists can thrive in start-ups. A guide from the American Psychological Association for those considering a career switch.

- ChatGPT will say yes to anything. Sycophancy (excessive agreeableness) in GPT-4o has been a problem for a while now. Last week, the company issued a press release about rolling back an update after screenshots went viral of chats in which the LLM encouraged a user to stop taking their psychiatric medication.

1. German ePA(ss).

Why the new digital health record system should be used carefully in clinical psychology and psychiatry.

The ePA (elektronische Patientenakte) is finally rolling out in Germany. First discussed almost 20 years ago, it is now becoming a reality. Here is an overview of the more recent history:

The only reason I am showing this graphic is that it tells you one thing: this was a bureaucratic mess. Even so, the government-owned gematik GmbH, which released it, oversimplified it for the unsuspecting reader. It leaves out any mention of the delays. The project was supposed to start in February of this year. I guess two months are not really worth mentioning after a 20-year process.

The rollout is also not immediate. Insurers and providers only need to start using it properly by October. Software updates and additional training seem like they could be done in less than six months, but I digress.

Now that it is becoming available, the question is: do you even want to use the ePA? Unfortunately, for people in psychotherapy or psychiatric treatment, the answer is probably no.

Because of how the ePA is structured, any doctor who gets access to your ePA sees all entries in it. So your dentist could see the medication you take for a psychological disorder. This is extremely sensitive information that could be used maliciously. The data controls are also not granular. You can delete information from your ePA, but then it is deleted for everyone accessing it. Alternatively, you can restrict how long a practice or hospital has access to your records. Neither is a truly functional solution.
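To make the all-or-nothing access model described above concrete, here is a toy Python sketch. It is a hypothetical model built only from the rules in the paragraph above (a practice with access sees every entry, deletion is global, and the only other lever is a time limit on access); the class, method, and entry names are invented for illustration and do not reflect gematik's actual data model or any ePA API.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ToyRecord:
    """Hypothetical toy model of the ePA access rules described above (not gematik's API)."""

    entries: dict[str, str] = field(default_factory=dict)  # entry id -> content
    grants: dict[str, date] = field(default_factory=dict)  # practice -> access expiry date

    def grant(self, practice: str, today: date, days: int) -> None:
        # The patient's only per-practice lever: how long access lasts.
        self.grants[practice] = today + timedelta(days=days)

    def delete(self, entry_id: str) -> None:
        # Deletion is global: the entry disappears for every practice at once.
        self.entries.pop(entry_id, None)

    def view(self, practice: str, today: date) -> list[str]:
        # A practice with a valid grant sees every entry id; there is no
        # per-entry or per-specialty filter in this model.
        expiry = self.grants.get(practice)
        if expiry is None or today > expiry:
            return []
        return sorted(self.entries)


if __name__ == "__main__":
    record = ToyRecord({"dental-xray": "...", "antidepressant-rx": "..."})
    today = date(2025, 5, 1)
    record.grant("dentist", today, days=30)
    record.grant("psychiatrist", today, days=365)
    print(record.view("dentist", today))  # ['antidepressant-rx', 'dental-xray']
    record.delete("antidepressant-rx")  # also gone for the psychiatrist
    print(record.view("psychiatrist", today))  # ['dental-xray']
```

In this model the dentist and the psychiatrist either both see the antidepressant prescription or both lose it, which is exactly the overshare-or-undershare trade-off discussed below.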

It is important to note that a therapist's treatment notes are not put into the ePA. Good for security, bad for handing over treatment when you move to a different therapist. In that case, you still need to request a copy of your documentation from your previous therapist and hand it over to the new one.

All of this may matter less than you think, because the ePA is part of gematik's TI (Telematikinfrastruktur, i.e. telematics infrastructure). The TI currently has very low usage rates among psychotherapy practices: only 4% (green) report any usage.

It is good to see digitalization move forward in Germany at all. But realistically, because of privacy concerns and low adoption, this will not change much for those receiving treatment for psychological disorders. In most cases, the ePA looks like a lose-lose to me. Either you overshare information with practitioners for whom it is irrelevant, or you undershare by actively opting out (either completely or by deleting specific entries), which makes the ePA redundant.

It is remarkable to see such a mediocre solution to a fairly basic problem that countries like Sweden, Finland, and Spain have already solved. Sweden has had an ePA equivalent for 13 years now!

If you want to track the rollout of the ePA, gematik has a live dashboard here. For some reason, adoption has dipped over the last few months. I would love it if anyone could explain this.

2. ChatGPT is the World’s Busiest Therapist.

Almost 50% of surveyed AI users with self-reported mental health challenges use LLMs like ChatGPT for therapeutic support.

A new study from Sentio University and the Sentio Counseling Center is shining a spotlight on a paradigm shift in how Americans seek mental health support. According to the survey, general-purpose AI chatbots like ChatGPT could now be the single largest provider of mental health support in the United States:

48.7% of respondents who both use AI and self-report mental health challenges are utilizing major LLMs for therapeutic support.

Let's take a look at the study through three different lenses: the founder's, the clinician's, and the policymaker's.

I. Most interesting stats for founders in the field:

⏱️ 64% of respondents have used LLMs for mental health support for 4+ months, showing stronger sustained engagement than typical digital mental health applications.

In the past, there was little evidence for long-term use of LLMs for mental health purposes. Whilst this says nothing about how good or helpful those interactions are, the retention is remarkable. In Germany, DiGAs (Digitale Gesundheitsanwendungen, i.e. digital health applications available on prescription) are usually prescribed for only 3 months. This limits both monetization and treatment. The survey result suggests that acquiring customers outside the DiGA path might be an attractive alternative.

💵 [...] 61.1% of participants expressed a willingness to consider future mental health support from LLMs.

Whilst this is far from a guaranteed 61.1% conversion rate for a paid solution, it is still a strong market signal. Awareness of this kind of (complementary) treatment is high, potentially reducing the cost of market education. That leaves more funds for developing better products, yay!

II. Most relevant stat for clinicians:

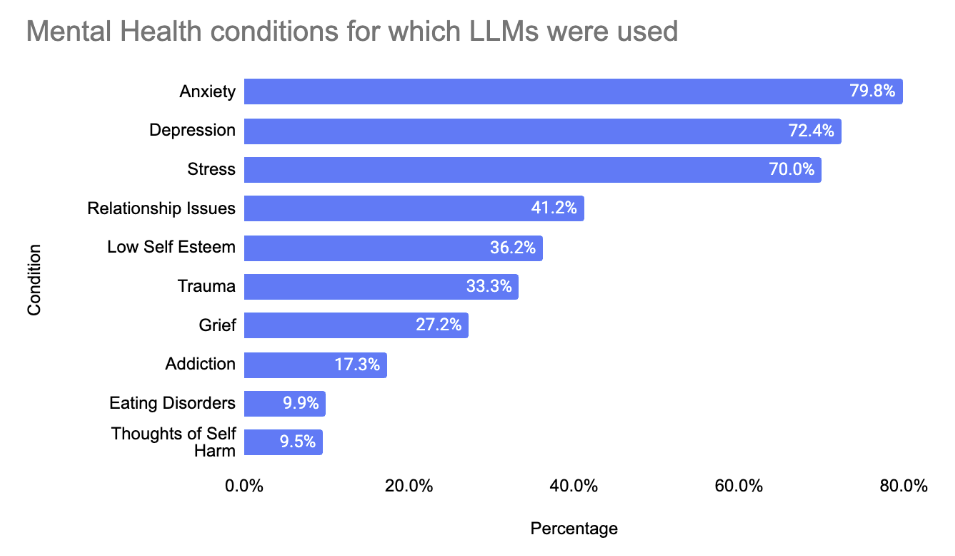

📈 80% use LLMs for anxiety management and 72% for depression support.

The most common conditions are not surprising.

What is concerning are the last two rows. Eating disorders can become very dangerous if not treated early enough, and an LLM giving the wrong answers might increase the risk of mortality. The same goes for self-harm. The numbers show that these are far from rare edge cases. If severe enough, self-harm and suicide are closely related to most other conditions on the list. Signing up to help with depression also means signing up to handle these even more sensitive cases.

This chart shows why reliably detecting these topics and bringing a human into the loop need to be front and center in any new deployment.

III. For policymakers:

💸 90% cite accessibility and 70% cite affordability as primary motivations for using LLMs for mental health support.

If adequate human care were available, the market for AI therapists would not exist. That is a dream and will probably remain one. Unfortunately, AI healthcare will be a market for the foreseeable future.

The authors' conclusion: AI will complement, not replace, human therapy. I strongly support that perspective. But drawing that conclusion from usage data is wrong. Evidence of effectiveness should be the deciding factor. Better no AI therapist than a bad one.

Regardless, the fact that the technology has found such broad application for mental health purposes, and did so organically, is astounding. This highlights the massive need for better mental health care, to be filled by more human therapists and better supplemental technologies.

Whilst it is great news that people find LLMs helpful in moments of mental distress, the fact that these interactions happen informally is also a significant risk. Regulators should step in and provide better frameworks for healthy usage, alongside more clinical research on what actually works.

Read the pre-print here.

Or see this for a more convenient summary:

3. Environmental Toxins and Mental Health.

Fixing your sleep might be pointless if you are constantly exposed to noise, bad air, and Temu-quality, toxin-infused products.

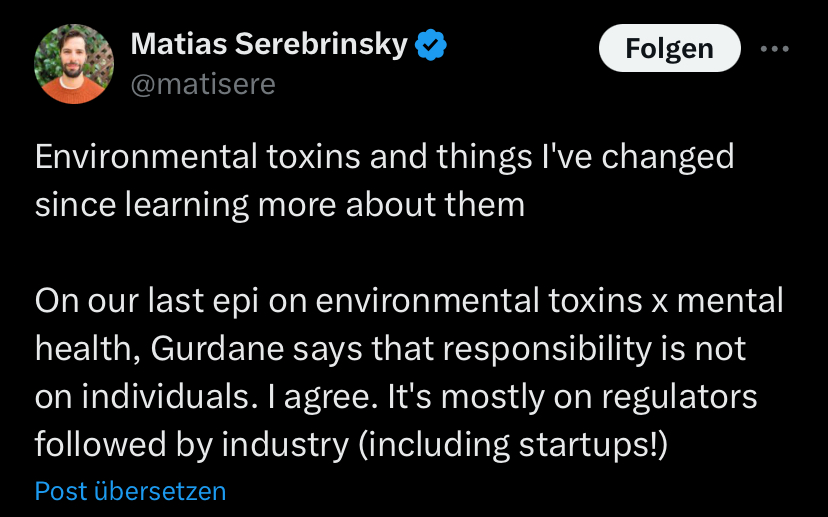

Recently, I stumbled across this post from Matias Serebrinsky, Cofounder at PsyMed Ventures:

Environmental toxins have significant impacts on mental health, with various pollutants linked to cognitive impairment and psychological disorders. Air pollutants, heavy metals, pesticides, and noise pollution are associated with anxiety, mood disorders, and psychotic syndromes (Ventriglio et al., 2020).

But how do I know that I am affected? And how can I track exposure and change my environment and behaviors to improve health again and prevent long-term damage?

Whilst companies like Function Health, Superpower, and InsideTracker all promise insights related to mood or mental state, none of them focus on mental health.

And yes, no Teflon, single-use plastics, etc. in my house. But how do I know the impact of things like that? With long-term health decisions, anything that narrows the gap in understanding between decision and outcome will increase adoption.

This could take many forms:

- A special testing kit.

- A done-for-you service screening your home and recommending a "treatment plan".

- A health tracking device.

Is anyone working on this? I assume it would require a lot of groundwork to establish the research basis to build such a company on, but the funding rounds of the aforementioned companies imply that there is quite a lot of money in the market.

I have plenty more ideas on the topic, so shoot me an email!

Odd Lots: Meta fake friends, Sycophancy, Psychologists in Start-ups.

Food for thought to help you build better companies.

I. Meta will flood your feed with fake friends.

In a recent interview with podcaster Dwarkesh Patel, Mark Zuckerberg talked about how the average American has fewer friends than they "need".

"There's the stat that I always think is crazy, the average American, I think, has fewer than three friends," Zuckerberg told Patel. "And the average person has demand for meaningfully more, I think it's like 15 friends or something, right?"

This is a "people don't get as much serotonin as they want, let's give them crack" level of argument.

Futurism provides a great summary of why a push for more social interaction with LLMs is, indeed, a horrible idea.

Recently, 404 Media published a piece on Meta allowing users to create chatbots that claim to be licensed therapists. Clearly, mental health and the loneliness crisis are their only motivations for creating products!

Read the full story here:

II. Sycophancy in GPT-4o

AI models often tend to agree with the user's input. They are affirmative and support the user's take (within the boundaries set by the provider). Last Monday, OpenAI issued what reads as a vague apology for the most recent update to the 4o model, which made it overly agreeable, or sycophantic. This comes as screenshots of chats make the rounds in which ChatGPT affirms clearly delusional, psychotic thinking.

The announcement doesn’t contain many specifics on what happened and what will be changed to prevent sycophancy in the future.

This is the first instance of an AI model maker apologizing for their product’s personality. The announcement highlights the conflict of interest companies face when designing their products. Make a model more agreeable and users have a better experience, which increases spending. Make it more honest, neutral, and ethical, and users might be turned away. Agreement and affirmation are addictive, and the evolution of social media shows that companies don’t hesitate to profit from addiction.

The press release: https://openai.com/index/sycophancy-in-gpt-4o

III. How Psychologists Can Thrive in Start-ups.

This APA article doesn’t simply tell you to become the next head of mental health at a random e-commerce startup. Instead, it introduces specific roles in which psychologists can thrive thanks to their formal training. Helpful for anyone wanting to go into UX, product management, marketing, or data science, and most importantly for those looking to found their own company.

Alright, see you next week!

Best

Friederich

Not in yet? This passion project is about connecting and building great solutions together.