05 – Reviving Mindstrong, Mental Health Highlights from the Stanford AI Index

Mindstrong promised to build the digital "smoke alarm" for mental illness. They raised 160M USD at a 660M USD valuation on this idea. Growth and revenue pressures ruined the company. I dissect the company's collapse and explain why the idea might still be alive.

This week's top stories:

- Reviving a business killed by VCs. Mindstrong promised to build the digital "smoke alarm" for mental illness. They raised 160M USD at a 660M USD valuation on this idea. Growth and revenue pressures ruined the company. I dissect the company's collapse and explain why the idea might still be alive.

- Mental Health in AI. The Stanford AI Index Report is one of the most important resources for understanding AI's real world impact. I highlight findings and developments relevant to mental health tech. Most of them hide a clear business opportunity.

The Odd Lots – Starting your week fresh:

- 📈 The Venture Capitalist Playbook is Breaking Therapy – A take on business driving the good intentions out of mental health startups.

- ➕ Bureau of Labor Statistics – Counseling is among the fastest-growing professions in the US.

- 💍 Oura Makes Sensors Mainstream – a VC firm's take on why sensing technology is a massive opportunity.

I. Mindstrong: How the 660M USD digital "smoke alarm" for mental illness failed.

And how the idea could be revived today.

Whilst the company didn’t work out at all, the original idea of the “smoke alarm” for mental illness is still alive in my view. Let’s recap quickly:

Mindstrong started out with passive sensing to detect the onset of mental illness, an innovative product that attracted significant funding. They later retreated to app-based mental health care. That had been part of the product from day one, but every innovative part was killed off along the way. Mindstrong failed due to internal struggles, funding issues and layoffs (including the departure of founder Tom Insel). Additionally, they went to market with a half-baked product: their MVP was not good enough. Funding and growth pressures eventually forced them to backtrack and provide what is a commodity product today: online therapy.

The company shut down in 2023 and what remained of it was sold to SonderMind.

I want to focus on the idea before the pivot. There are dozens of app-based mental health solutions today. The original idea was far more distinctive (and much harder). Why did it not work?

The initial promise falls apart.

Mindstrong created software that monitored how users typed and interacted with their phone within their therapy app, claiming these digital patterns could serve as early warning signs of mental health issues like depression. Essentially, this was an automated alert system for psychological distress. Generated insights could then be used to provide early care.

Basically, the company would analyze chatting behavior when patients used the Mindstrong app to talk to their therapists. The therapists would then get a dashboard summarizing all concerning changes in behavior. In essence, a data analytics product.
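To make the "smoke alarm" idea more tangible, here is a minimal sketch of how a behavioral change could be flagged against a user's own baseline. This is not Mindstrong's algorithm, which I don't know in detail. It is a generic rolling z-score, and the feature (a daily median pause between keystrokes), the 14-day window and the threshold of 2 are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_deviations(daily_values, baseline_days=14, z_threshold=2.0):
    """Return the indices of days that deviate strongly from the personal baseline.

    daily_values: one number per day, e.g. a hypothetical median pause
    between keystrokes in milliseconds (an assumed feature, not a validated biomarker).
    """
    flagged = []
    for day in range(baseline_days, len(daily_values)):
        # The baseline is the user's own recent history, not a population norm.
        baseline = daily_values[day - baseline_days:day]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            continue  # no variation in the baseline, so a z-score is undefined
        z = (daily_values[day] - mu) / sigma
        if abs(z) >= z_threshold:
            flagged.append(day)
    return flagged


# Synthetic example: two stable weeks, one normal day, then a sudden slowdown.
typing_pauses_ms = [200, 210] * 7 + [205, 260]
print(flag_deviations(typing_pauses_ms))  # prints [15]
```

A real product would need far more than this: clinically validated features, a way to handle missing days, and above all evidence that a flagged day actually predicts anything. That validation step is exactly where the failures listed below come in.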

Let’s take culture and management issues out of the question and look at directly product-related failures:

- Premature commercialization. Co-founder Paul Dagum (a data scientist) said that he spent too much time selling the tech rather than developing it. In hindsight, he would have wanted to conduct more rigorous clinical testing to evaluate the product and understand its capabilities before selling it. He was the one who came up with the initial idea back in 2012.

- Piloting too early. The company entered research partnerships and deployed the tech for limited real-world use in Los Angeles County. The product failed to detect suicidal tendencies in two users, who later tried to take their own lives.

- Business ran away, clinical “dragged behind”. A mismatch in how fast different units of the company moved led to internal tension. The business unit ultimately set the pace and clinical development couldn’t keep up, leading to an underbaked product.

What to learn.

- Health MVPs are like airplanes. Safety needs to be built in from day one. A rocket you can blow up a few times before getting successful lift-off. A plane is piloted by a human from day one. The same goes for mental health products.

- Early warning systems aren’t perfect. As with self-driving cars, the tech will never find 100 % acceptance, because it will never be entirely free of faults. Still, the first versions of the product were too far from what was deemed “good enough”. The company should have communicated the capabilities and shortcomings of the product better, which would have helped it handle the bad press. But error rates in the software should also have been lower from the get-go.

- Listen to the clinician. At the time, Mindstrong’s idea for this “biomarker product” was not seen as outlandish. Industry experts did see potential in the solution. But a STAT article from 2 years ago mentions that clinicians’ input wasn’t valued enough at the company. Most importantly, clinicians weren’t in top leadership roles. The business people steered the ship. A recipe for disaster.

Why this is still a major opportunity.

A stronger focus on prevention could reduce financial pressure on healthcare systems around the world, as every euro spent on prevention can save several euros in treatment costs. Still, prevention is the tougher sell, for the silly reason that buyers want short-term remedies for their issues and incentives are set in ways that discourage long-term investment. The German healthcare system is suffering badly due to this.

Germany spent 492B EUR on continuous care in 2024. Only 25B EUR, roughly 5 % of that figure, went towards prevention. There is evidence that more prevention saves costs, even after accounting for the cost of implementation.

It is also clear that others are going after the opportunity of ambient health tracking. Companies like Function Health or Superpower, which provide standardized testing to track true biomarkers and detect issues early, have raised massive funding. Superpower recently announced a 30M USD round, and Function Health recently acquired Ezra. None of those companies are focussing on mental health, though.

In case you are more curious about Mindstrong, you can read some of the original company coverage from 2017 in Wired.

II. Highlights from the Stanford AI Index Report 2025

Plus: a few business cases.

The Stanford AI Index Report is one of the most crucial sources for understanding the real-world impact of AI. The report covers multiple industries, including health.

The following are the highlights from Chapter 5: Science and Medicine of the Report, relevant to mental health tech. These developments and statistics contain multiple potential businesses.

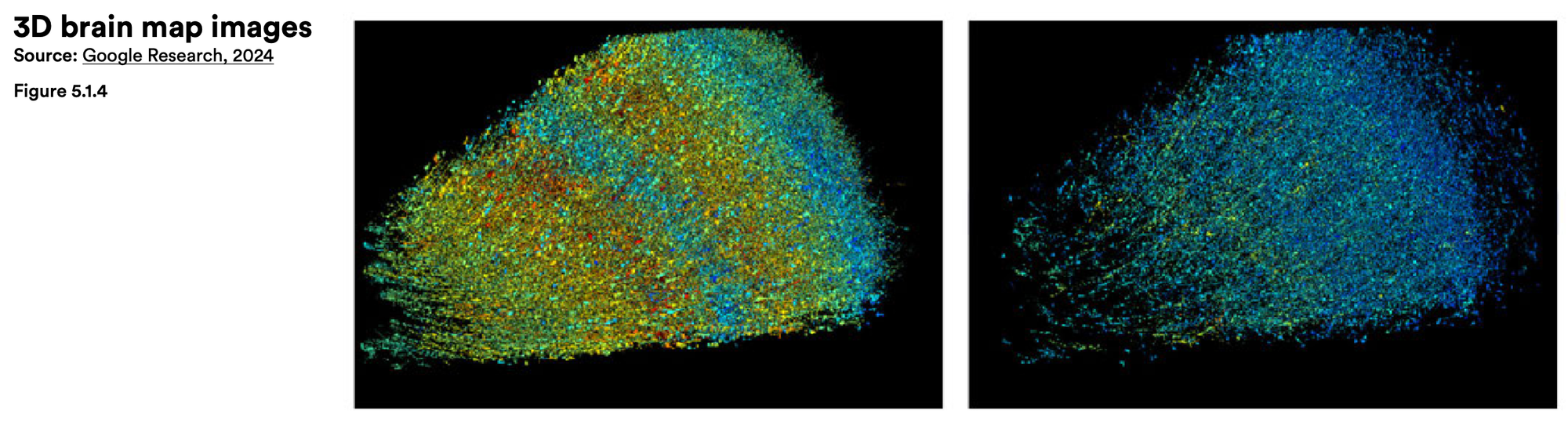

Human Brain Mapping

Google's Connectomics project has reconstructed one cubic millimeter of human brain tissue, creating the most detailed map of brain connections ever made. The sample, from an epileptic patient's temporal lobe, was sliced into 5,000 ultra-thin sections and imaged with electron microscopy. This revealed 57,000 cells and 150 million synapses. Mapping at this level of detail could aid drug discovery in ways that might break the roughly 30-year standstill in psychopharmacology.

Imaging Advances

fMRI scans might become more insightful in the next few years. This is the key metric:

More than 80% of FDA-cleared machine learning software targets the analysis of medical images.

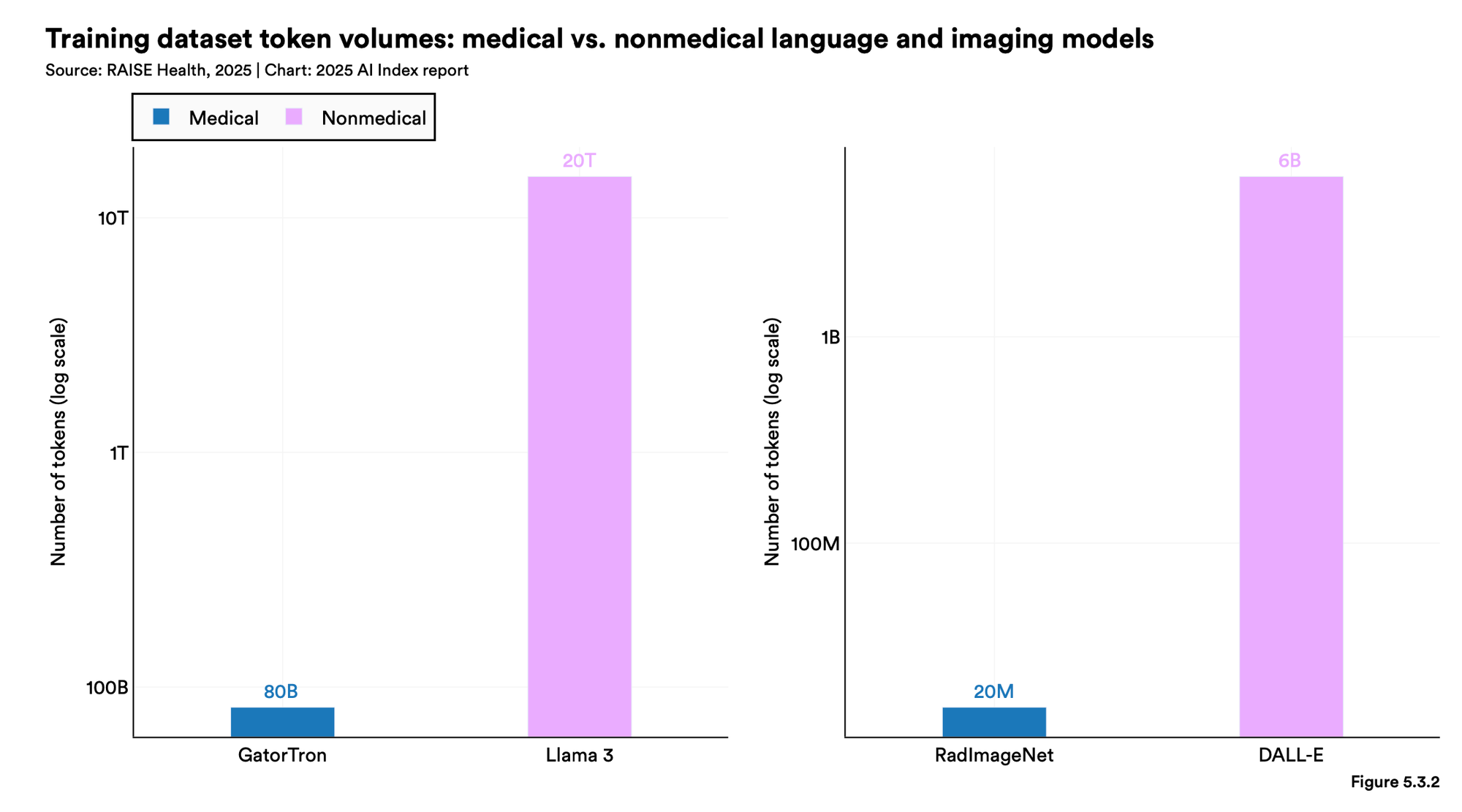

According to the report, AI is mainly applied to 2D data currently. This is partially for complexity reasons, but also due to data constraints. Training sets for medical imaging remain limited. Maybe a private company could be built around acquiring and licensing such specialized data sets?

What makes this difficult is the annotation of the raw images, from which the AI can then learn. For MRI (not fMRI, which would be much more interesting for mental health applications), the biggest dataset is the UK Biobank’s, with around 100,000 scans. The data is open access. Datasets around three times that size were used for 2D applications of vision models.

The data limitations are shown directly in this graph: medical models are trained on comically small datasets in comparison to non-clinical LLMs and image models.

LVLMs for Mental Health Applications

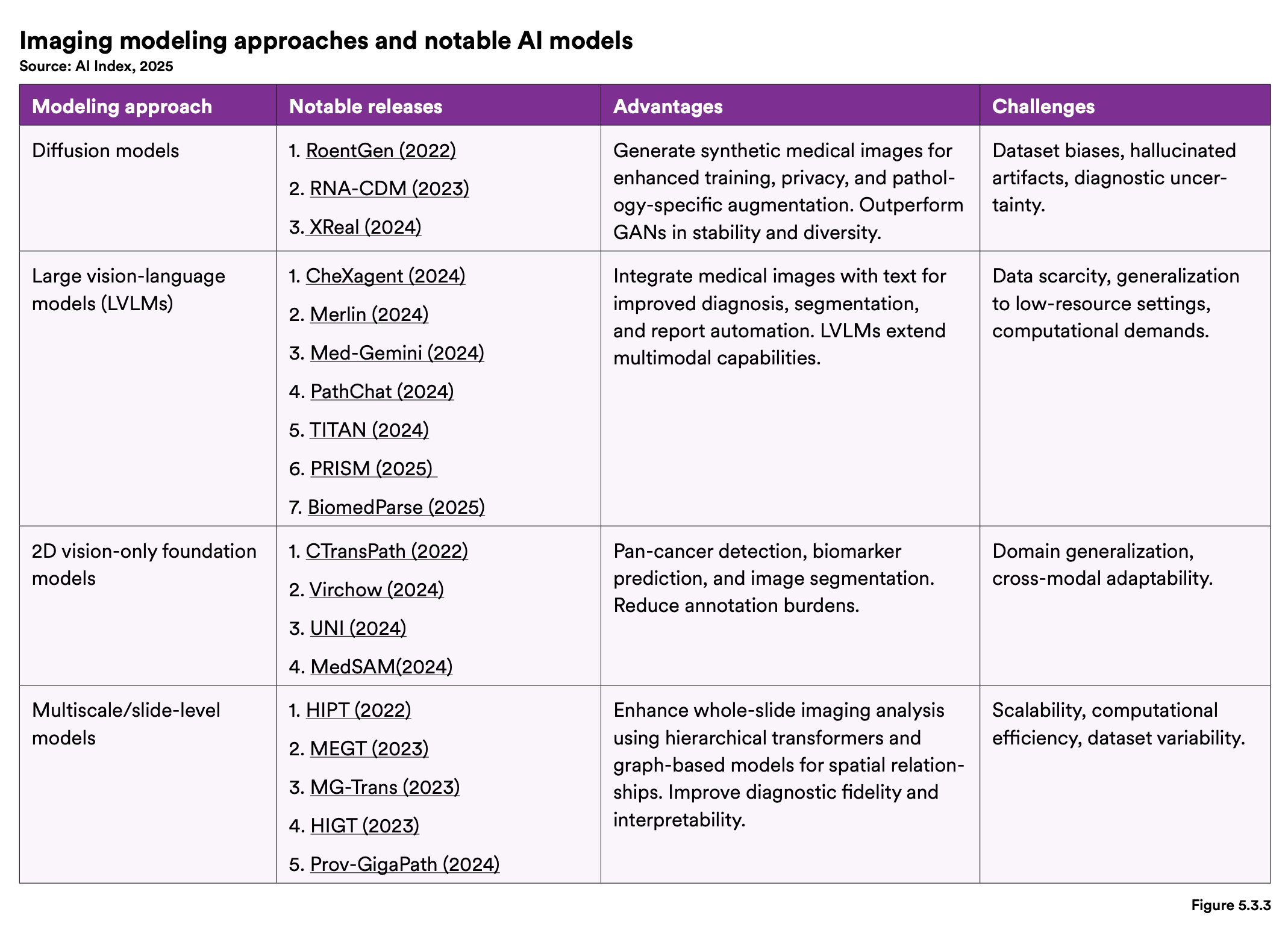

Leading models for medical applications, separated by technical approach, are summarized here with their advantages and weaknesses.

Large Vision-Language Models (LVLMs) would likely show the most promise for mental health applications, specifically models like Med-Gemini (2024) and Merlin (2024).

Why are LVLMs well-suited for mental health applications? 👇

- Multimodal capabilities: LVLMs can integrate medical images with text, which is valuable for mental health where visual observations (brain scans, behavioral analysis from video, facial expressions) combined with textual information (patient histories, symptom descriptions) can provide comprehensive diagnostic support.

- Report automation: The ability to generate automated reports could help mental health professionals document symptoms, treatment plans, and progress notes more efficiently.

- Diagnostic support: The integration of visual and textual data could assist in conditions where imaging plays a role (such as neuropsychiatric conditions) while also processing written assessments and patient communications.

The main challenge for mental health applications is still the "data scarcity" issue, as mental health data is more sensitive and less abundant than other medical imaging data.

Mental Health-Related Tasks for NLP Tech

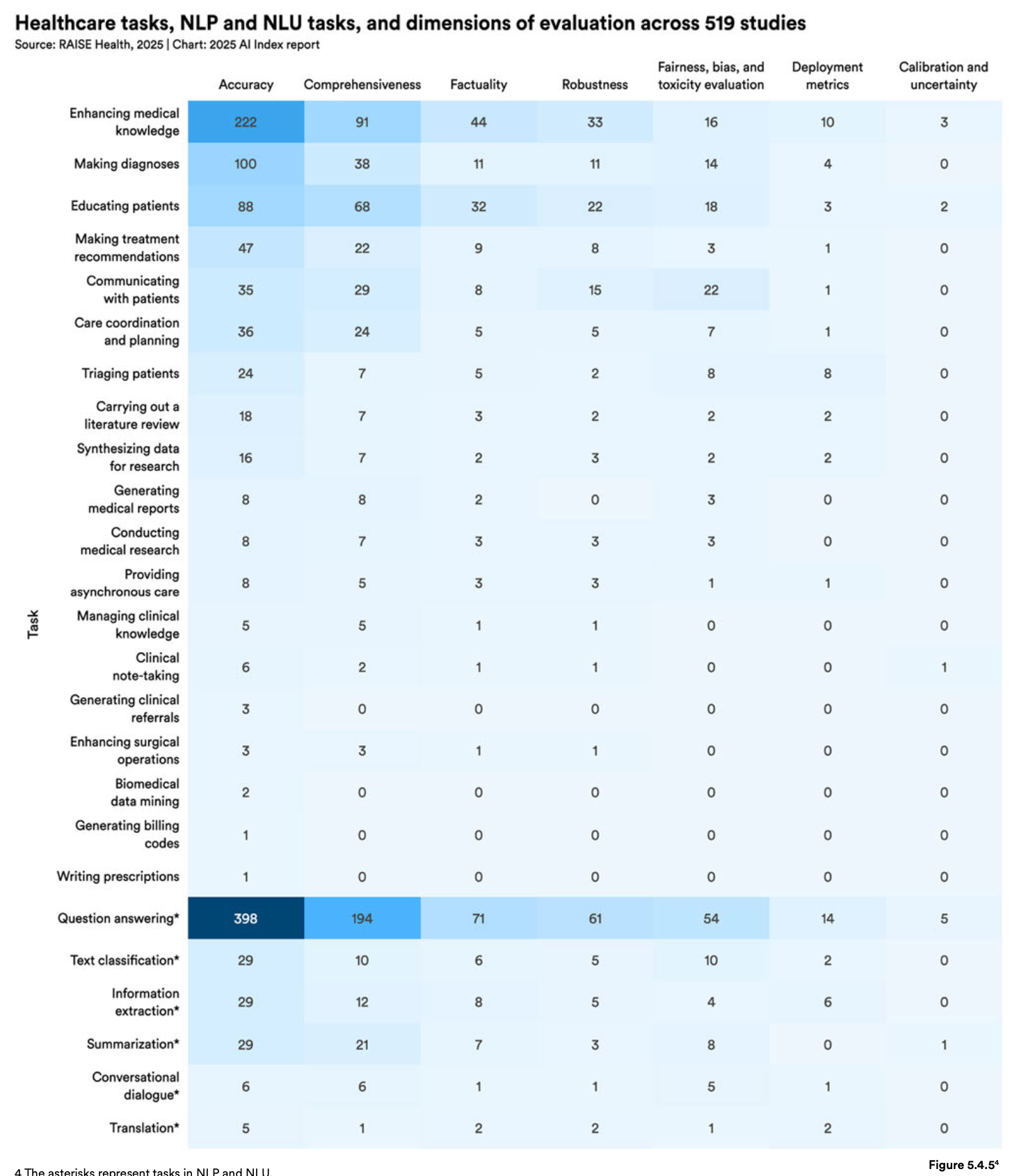

The report contains a short meta-analysis of research on NLP tech from early 2024. Diagnostics and medical knowledge were by far the most common applications. Takeaways from this chart relevant for mental health:

- LLMs seem to work quite well for patient education. Psychoeducation plays a significant role in many psychotherapeutic approaches.

- Clinical note-taking and summarization score quite badly on accuracy. A bad omen for all those AI scribes.

Quick Observations

Some more short observations to round this section off. Take these with some caution.

- The authors highlight a study on the quality of synthetically generated heart disease datasets. They find it to be feasible and capable of significantly enhancing AI applications. One can only speculate on the transferability to other domains, like conversational therapy. I am not aware of any such studies regarding data relevant to mental health. The advantages of synthetic data, especially around privacy, are even stronger IMO than in other domains. A heart condition is less personal than severe depression.

- Mental health is mentioned a total of 4 times in the whole 456-page report. For the next iteration, I predict a separate section on mental health issues.

- In 2023, the Department of Veterans Affairs in the USA was the second-biggest spender in AI-related contracts. They focus on AI for diagnostics, robotic prostheses, and mental health. Meanwhile, I see almost no product targeted at this market.

- OpenAI’s o1 reached 96.0% on the MedQA benchmark. AI is starting to approach “perfect” knowledge. MedQA may be approaching saturation, signaling the need for more challenging evaluations of clinical knowledge. Benchmarks are also very difficult to keep out of training data. Companies that train models, like Meta, might even be actively including them to get higher test scores. MedQA has become quite old and was based on existing textbook questions from the get-go. It is time for a new bespoke benchmark. Maybe even a mental health-specific one?

Odd Lots – VC Money in Therapy, Labor Statistics, and Sensing a Future?

Food for thought to help you build better this week.

I. The Venture Capitalist Playbook is Breaking Therapy

Megan Cornish explores VC as a major factor in worsening the workload for therapists, potentially leading to declining care. She mentions examples of therapists being fired out of the blue from online therapy platforms and immediately losing contact with their patients, which throws away the relationship and trust they had established. According to her, such “business decisions” are quite common in the industry and often driven by outside capital. Money speaks louder than good intentions. To her, the scaling demands of VC are incompatible with continuous, high-quality clinical care.

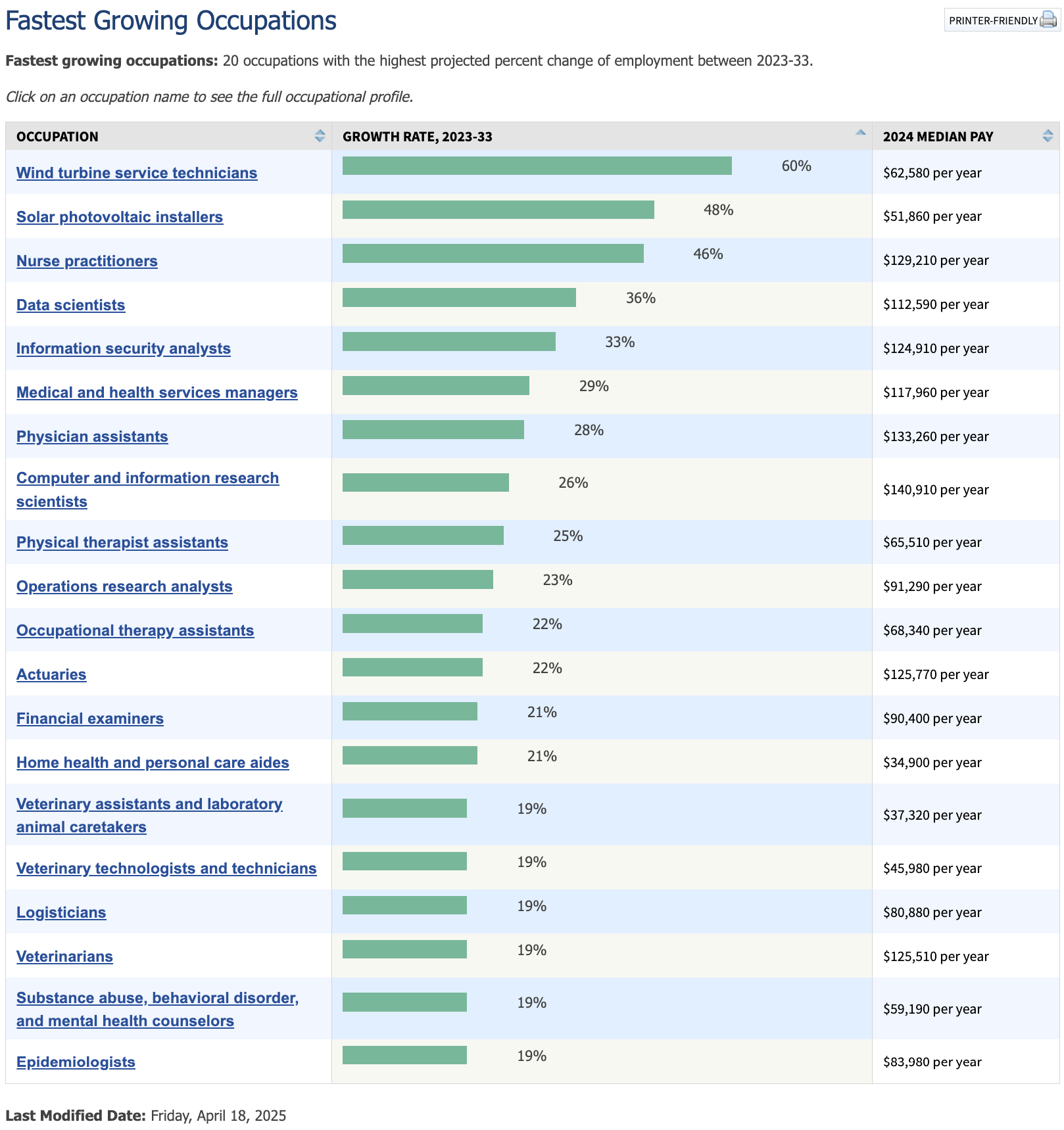

II. Bureau of Labor Statistics – Fast Growing Professions in Mental Healthcare

Amongst the top 20 fastest-growing occupations, according to the BLS’s projections, 8 are directly in health. The number of mental health counselors is expected to grow by 19 % until 2033.

III. Thesis on Sensors

Health sensors are starting to go beyond heart rates. Oura just made CGM mainstream, prompting 2048 Ventures to examine the business case around sensing tech quite positively. The style of this piece, which calls sensors “Trojan Horses”, seems to fit quite well with Megan Cornish’s take on VCs in therapy. Read carefully!

Alright, that's it for the week!

Best

Friederich
