06 – Will AI Impact Wellbeing Like Social Media Did?

🗞️ This week's top stories:

- When will LLMs have their social media moment? As with any new technology, there are hopes of quality-of-life improvements. Social media was hailed as a way to connect with more people, but has turned into a mindless attention-grabbing money machine. Will the same happen to AI? Or will it deliver on mental health?

- Can AI scribes hurt therapy outcomes? AI scribes pride themselves on filtering out the medically relevant information and cutting the chitchat out of patient encounter notes. Prompted by a doctor's personal experience, I outline further questions for the therapy profession.

👁️ Odd Lots – News from the mental healthcare sector:

- Spring Health pushes for first AI Mental Health benchmark.

- TikTok is coming for Headspace?

- What OpenAI's new HealthBench is and isn't.

- Nature: Efficacy of app-based mental health interventions.

- SpellRing: A Smart Ring for Sign Language

I. Back when people thought social media would be healthy.

And then expectations met reality.

The mental health sector has been swept up in the AI hype like most others. LLMs for therapeutic applications have been a major topic since the launch of ChatGPT. Since 2022, a multitude of applications has launched. A new wave of AI-based interventions has arrived. The little evidence published thus far on the benefits and safety of these treatments has mostly been provided by the makers of those various chatbots themselves.

Whilst it is great to see so many companies build evidence-based products, their research is limited and more focussed on marketability than on finding every possible risk and issue with their system. The incentive is not to build the safest product, but the most profitable one. Add to that the move-fast-and-break-things mentality of most startups, and VCs breathing down companies' necks to turn a profit quickly. Now you have the same recipe that turned Facebook from a network into an attention-grabbing machine.

What am I getting at? I think that there is a real risk of LLM-based interventions taking the same path as social media when it comes to mental health and wellbeing. This could not only hurt those already suffering but also make more people sick.

AI is currently where Instagram was in 2013. Back then, it already had hundreds of millions of users and had changed society massively. The first generation of high schoolers was growing up online, not only in the form of text, but with images and (a bit later) video. TikTok would not be out for another 4 years (when it entered Western markets through the Musical.ly merger), and nobody knew what the heck Reels were. At the time, there was plenty of research showing POSITIVE effects of social media on wellbeing:

Early studies of social media indicate that features of Facebook promoted positive mental health outcomes. Investigations show that reminiscing through photos and wall posts improved mood and self-soothing (Good et al., 2013) and that a larger Facebook network was linked with increased perceived support and reduced stress (Nabi et al., 2013). Self-disclosure on Facebook buffered stress and enhanced life satisfaction among college students (Zhang, 2017), and a review of Facebook-based support associated it with lower levels of depression and anxiety (Gilmour et al., 2020). Some work that surveyed general social networking found mixed results, but the early research consistently highlighted social support, emotional benefits, and psychological resource enhancement as key affordances.

This summary was compiled using the research tool Elicit.

But let me start from the beginning of digital addiction:

The internet became publicly accessible through the World Wide Web in 1991. The term “Internet Addiction Disorder” turned up in 1995, introduced by the psychiatrist Ivan Goldberg in his newsletter. He meant it as a joke, but the severity of the issue was recognized quickly. Clinical psychologist Kimberly Young published the first case study on the new disorder in 1996.

Facebook launched in 2004 and became publicly available in 2006. The term Facebook Addiction Disorder entered pop culture in 2011. It then quickly morphed into social media addiction, the term we know today.

Facebook was built as a way to connect. The idea was not to create an addictive, brainless place on the internet. The incentives to push the platform in that direction came quickly with the model of monetizing attention through ads. More time spent equals more ad spots sold. All social media platforms existing today only survive by doing what Facebook did: running a free platform financed by advertisers. A social media network with the idea of simply connecting people would not make money today. It needs to serve content that keeps users engaged and scrolling.

All of this has culminated in the following:

In almost all countries, at least 50% of 15-year-olds spent 30 or more hours per week on digital devices, with a notable minority – ranging from 10% in Japan to 43% in Latvia – reported spending 60 or more hours online.

The quote is from a recent OECD study on children in the digital age. Thirty hours a week works out to roughly 4.3 hours a DAY, so at least every other 15-year-old spends that much time on digital devices. That is equivalent to at least 4 full-blown hobbies.

Imagine a kid who plays soccer and the piano, swims and paints. Now replace all those activities and insert: Instagram & TikTok. What a horrible childhood.

Why do I focus on children here? They are the most vulnerable group. Their wellbeing suffers first when it comes to significant societal changes. Their experiences are an early warning sign of what is to come for the rest of us.

General-purpose AI will do the same. We already see signs of emotional escapism and addiction in LLM usage patterns. There are more companies letting you create your perfect AI girlfriend than there are automobile industry lobbyists in Berlin.

Early evidence suggests AI benefits mental health. But just as Facebook decided to prioritize watch time over the mental health of its users, I can see OpenAI going down a similar path. Their recent hire of former Instacart CEO Fidji Simo is a clear sign of how OpenAI will monetize. Simo has built ad businesses at Instacart and other companies before. She will probably do the same for OpenAI as their new CEO of applications. She will be taking over the product side of OpenAI, whilst Altman focusses on research and compute.

OpenAI builds the foundational models that many other companies base their chatbot applications on. Their push for monetization will probably also influence the way the models are built. In fact, it already has. The company was recently forced to roll back an update that made ChatGPT overly agreeable. Why do such a thing? To keep users engaged for longer. The same way you use social media because you like your friends, you use your preferred chatbot because it is nice to you. OpenAI involuntarily tested the boundaries of that thesis and got quite a bit of bad press for it. But only because the change in style was quick and unnatural. If such an adjustment were stretched out over the next 10 years, ChatGPT being more agreeable than the average Donald Trump advisor would raise a lot fewer eyebrows.

Risk no. 1: AI addiction as a contraindication for AI treatment? So let's look at specialized mental health applications now. AI has been hailed as a new supertool that will bridge the eternal treatment gap and provide care to those not wealthy enough to afford real psychotherapy and those waiting months on end for their first session. The whole world is currently getting squeezed into a chatbot interface. The next evolutionary step of social media addiction will be AI addiction. Fake friends, which Mark Zuckerberg wants to introduce at scale at Meta, fake emotions and fake conversations: all of those will become addictive. Just as we wouldn't treat social media addiction with social media, we will probably not treat AI addiction with AI.

AI addiction will exacerbate mental health issues. Once it becomes the cause, I doubt many will still want it as the treatment too.

Risk no. 2: Startups need to build profitable interventions. This is basically the expectation once you raise money from third parties. It is perfectly illustrated by the Mindstrong fiasco, which I covered last week. The company didn't listen to its clinicians enough and made a dangerous & ineffective product due to financial pressures and mismanagement. I doubt many will be able to avoid short-term-thinking VCs.

I know that I am painting a very dark picture. Do I really think every AI mental health product is doomed? No. But I see the two issues outlined above as major risks that individual companies can't really address.

The good news: mental health professions seem like the only AI-proof work to me, for the foreseeable future. But by virtue of being seen as part of the problem, AI mental health products will have a harder time the more predatory non-mental-health applications become.

II. How AI scribes could hurt healthcare work.

Note-taking is not only a necessity in providing proper care, but also an important step in the treatment. Memory is integral to mental healthcare.

In an article published in The New England Journal of Medicine, Dr. Gordon Schiff highlights one core weakness of AI-generated patient documentation: It cuts out everything irrelevant, meaning non-medical. The AI scribe he mentions, Microsoft Nuance DAX, and others pride themselves on removing the chitchat from patient conversations. Schiff says that this makes interactions more transactional than they should be. He also highlights the temptation to sign off quickly on the AI-generated notes without careful review and revision.

But it is not only the temptation to simply sign off on AI notes. Schiff argues that by not taking the time to write notes, treatment plans also suffer. They are part of the note-taking and require conscious effort to be adjusted to the individual's needs. This cognitive effort is taken out of the equation.

As a physician, he laments the loss of his patients' social history. Now what about those in the healthcare system whose healing work relies on social history: therapists?

Experience matters in mental healthcare. Getting an intuitive grasp of a disorder, but also describing and structuring it concisely, is something you learn over years of practice. Remembering personality is also core to empathizing with individuals. AI scribes as memory replacements will potentially worsen therapists' ability to build on previous sessions, as they won't recall the nuances they used to embed in their notes and thereby think through. Mental automation will potentially make care worse.

Schiff mentions how the time crunch under which doctors work leads to dehumanizing shorthand documentation. Moving from handwriting to digital notes didn't help this problem. AI could be beneficial in that regard. But I doubt it will be a net positive for therapy. AI scribes might take away one burden of overworked therapists but will worsen their ability to provide high-quality care by taking away the "burden" of memory as well.

Last note: I find it very funny that the title of that AI-critical article includes one of those extra-long dashes. They are generally acknowledged as the biggest telltale sign of AI-generated text. I am not suggesting anything here!

Odd Lots – News in Mental Health Care.

Curated news and insights to help you build better companies

I. Spring Health pushes for first AI Mental Health benchmark

Spring Health proposes an independent benchmark, for which they are the founding contributors. Their vision is quite blurry, with little in terms of concrete goals. I will keep you up to date on any news regarding the benchmark.

II. TikTok is coming for Headspace?

In another halfhearted effort to actually promote mental health instead of hurting it, TikTok introduced a new meditation feature that nudges users to put their phone away and go to sleep at a healthy time. I already gave my take on why their new "feature" is nonsense here:

III. A take on OpenAI's new HealthBench

OpenAI unveiled HealthBench, a new benchmark for evaluating clinical assistant LLMs, this week. Graham Walker (MD) provides a great explanation of what the benchmark measures and what it doesn't:

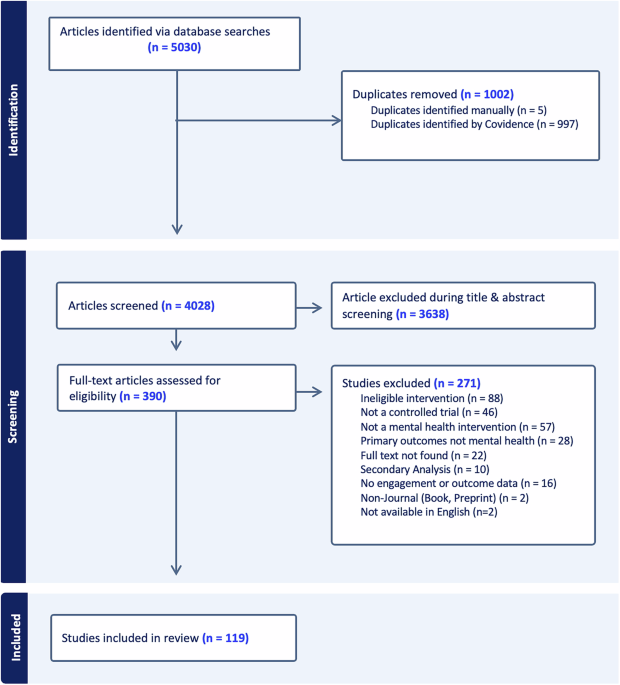

IV. Efficacy of app-based mental health interventions.

This meta-analysis published by Nature provides a great overview of current mental health apps and their efficacy. Some highlights:

- Most interventions were grounded in one or more psychological frameworks. Cognitive-behavioural therapy (CBT) was the most commonly used (60.5%, n = 72), followed by mindfulness-based approaches (23.5%, n = 28).

- The analysis demonstrated that digital interventions were significantly more effective than control conditions in reducing symptoms of depression, anxiety, stress, PTSD, and body image/eating disorders.

- No significant effects were observed for social anxiety, psychosis, suicide/self-harm, broad mental health outcomes, or postnatal depression.

V. Smart Ring for Sign Language

Researchers at Cornell University have unveiled a smart ring built to track fingerspelling, an aspect of American Sign Language (ASL). The major breakthrough lies in the miniaturization of the technology. They have summarized their findings in a preprint.

Alright, that's it for the week!

Best

Friederich
