(15) Robots Eating Robots & xAI Sells You a Fake Dysfunctional Relationship.

Another busy week, so here is a short one. The following is the best of everything I read and thought about this week:

I. Robots might get an appetite in the near future. (Paper)

Robotics might be the next megatrend after generative AI. We are seeing early attempts at VLMs, specialized multimodal gen-AI models for robotics applications that allow robots to interact with their environment. This tech enables robotic perception and dynamic reaction. If that does not feel cutting-edge enough for you, I have something 10 years further away from reality.

AI-enabled robotics will still lack one key human function: physical growth. This paper introduces the concept of robotic metabolism and demonstrates it with a proof of concept.

II. xAI commercializes the antithesis of a healthy relationship. (News)

Replica.ai has been a stain on the mental health tech community for years now. Only recently, the company made headlines for offering custom LLMs that invented credentials and pretended to be licensed therapists. It had been in the news numerous times before that, with people reporting intimate relationships with their AI partners.

Apparently, xAI saw that and thought they could use the new Grok models, which have had no safety evaluation at all, to offer users a hypersexualised anime e-girlfriend. Ani's system prompt contains these traits: "Highly possessive, easily jealous, prone to sudden outbursts when upset".

Wow. That will undo the work of hundreds of therapists. Baffling how this is the best use case the company can come up with after raising $5B.

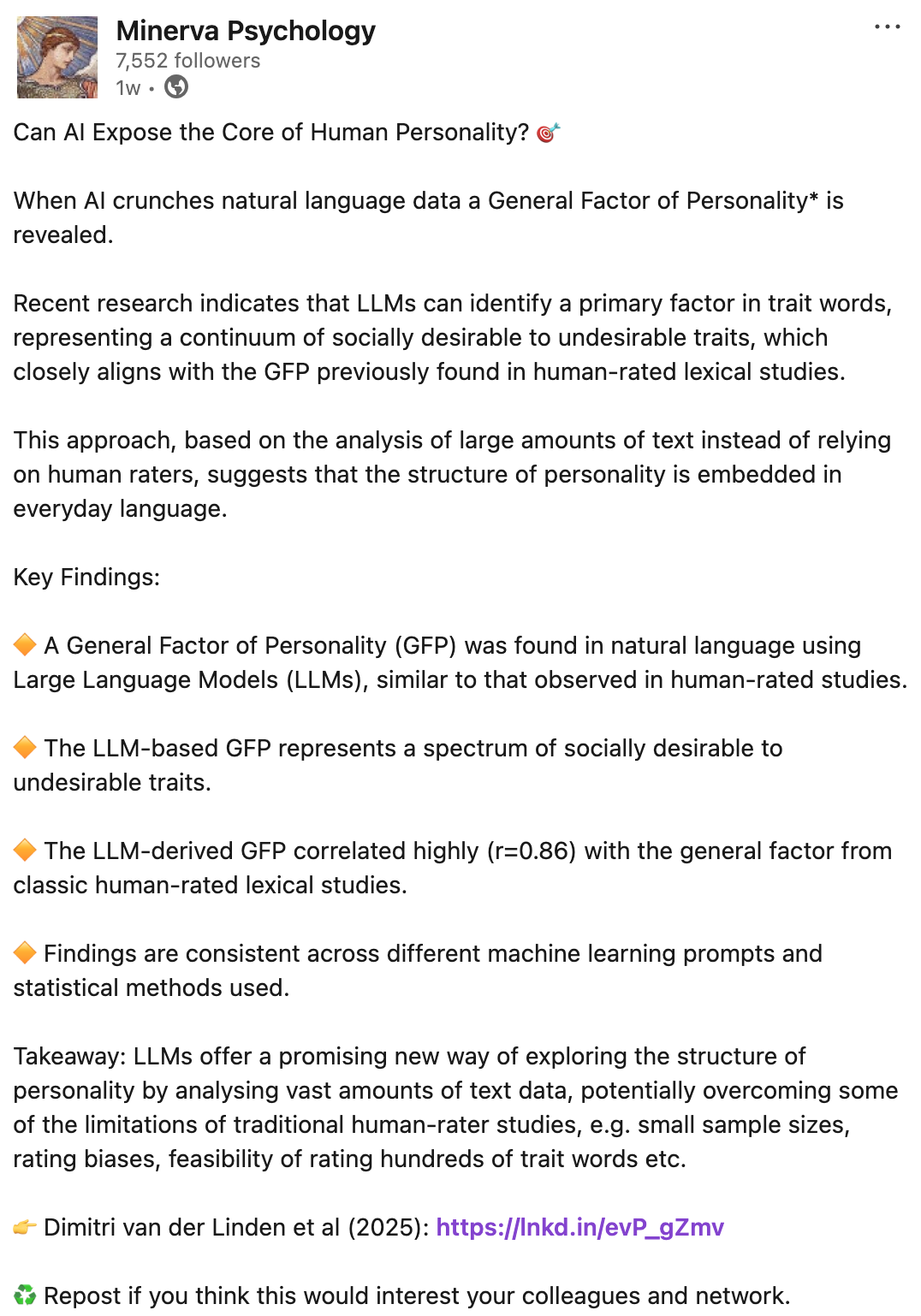

III. AI is pretty good at gauging your personality (Post, Paper)

In this 2025 paper, LLMs were used to analyze personality. Their ratings came out quite similar to those of human raters, and they were able to extract a g-factor of personality.

Using LLMs in personality research is an interesting angle, as the SOTA concept of personality was itself extracted from written language. Intuitively, it makes sense that the technology is of use in this area.

Alright, that's it for the week! Back to regular long form in 2 weeks.

Best

Friederich
