(18) Trivialization of Therapy & DiGA Efficacy

AI is trivializing therapy and eroding trust in professionals. Regulation, like HB 1806, signed into law in Illinois last week, can help.

For those who read last week's DiGA report: as promised, here is my sheet, where you can plug in your own assumptions.

Last week's DiGA analysis focussed purely on the economic viability of providing DiGAs. After looking at the feasibility of providing a treatment, the natural next question is: does that treatment actually help anyone? The foundation of a helpful tool is that it is safe:

I. AI safety.

The last few weeks saw a lot of news about the safety of AI applications in healthcare. There is one major theme: trivialization of therapy.

OpenAI has recognized the size of the healthcare market by now. With HealthBench and a recent study in Kenya on doctor-AI tandem decision-making, they have made their first formal forays into healthcare. The open-weight gpt-oss models are also attractive to the healthcare sector as commercially usable, locally running models.

Generalist LLMs are being used for mental health advice far and wide, so it should be welcomed that OpenAI is making their models safer, right?

But OpenAI has not necessarily been applying much clinical rigor. At the GPT-5 presentation, they pulled this:

With the launch of GPT-5, OpenAI has begun explicitly telling people to use its models for health advice. At the launch event, Altman welcomed on stage Felipe Millon, an OpenAI employee, and his wife, Carolina Millon, who had recently been diagnosed with multiple forms of cancer. Carolina spoke about asking ChatGPT for help with her diagnoses, saying that she had uploaded copies of her biopsy results to ChatGPT to translate medical jargon and asked the AI for help making decisions about things like whether or not to pursue radiation. The trio called it an empowering example of shrinking the knowledge gap between doctors and patients.

Source: MIT Technology Review

You can also watch a video clip of that moment here.

The company is actively leaning into the trend of using ChatGPT not to support, but to effectively replace, a doctor. Whilst I can see the "help me understand doctor-lingo" use case, in this example they even went a step further: they asked for help in making healthcare decisions. The doctors didn't agree on Carolina Millon's further treatment plan, so they asked her to decide. Naturally, she off-loaded the decision to ChatGPT. Her husband goes on to say how ChatGPT gave them agency. I would argue that this was a false feeling of safety, especially when this was done through "gaining knowledge" as he puts it. The probability of her making this decision on partially wrong knowledge is extremely high.

Morbidly funny side note on that: ChatGPT gave a user a 19th-century illness.

Recently, the APA has called on the Consumer Product Safety Commission to put stricter guardrails up for the development of AI applications in psychology (The letter). One of the main issues cited in current developments is an erosion of trust in both the tech (for not properly handling data) and the profession of psychology (by simulating a "confidential therapeutic environment").

Realistically, the APA is completely powerless here, and effective collaboration with the regulators seems rather unrealistic in the current political environment of the US.

... unless individual states take the wheel, like Illinois, which signed HB 1806 into law last week. The law is designed to stop AI systems from acting as therapists. This has already had real-world impact: Slingshot AI had to disable Ash, their "AI therapist", for the state. They then went on to rally their users to fight back against this grave injustice.

Goes to show: Regulation does work. But companies will take every freedom to market their products to customers in the greatest need (and spending mood), until the very moment they are forced to stop.

II. Are DiGAs based on good evidence?

I came across a post by Torsten Schröder, MD, PhD on LinkedIn this week and want to expand upon the argument he provides. Here is a snapshot:

- Schröder presents a meta-analysis that looks at the quality of DiGA studies, which providers need to conduct to prove the efficacy of their products.

- The meta-analysis uses a risk-of-bias tool (RoB 2) that is designed for double-blinded trials. DiGA studies don't work as double-blinds, which is why they inherently score poorly in the evaluation.

- The conclusion: new methods are needed to evaluate digital therapeutics, focussing on patient endpoints (e.g. wellbeing).

You can find the paper here if you want to read for yourself first.

Whilst it makes sense to criticize this study for how it draws its conclusions, there is also a lot of legitimate criticism in there:

- Approval studies are methodologically weak: bias is (partially) high because of bad designs, missing data, and broad self-reported outcomes.

- Designs vary so much that you can’t reliably compare one DiGA against another.

- Study quality hasn't improved over time: guidelines haven't changed, so procedural flaws keep repeating.

- Reporting is poor: study plans and details aren't made public, limiting transparency.

- There are no official guidelines from the BfArM on how it evaluates DiGA approval studies.

Takeaway: The system needs standard measures, longer observation periods, and real-world data to prove sustained benefit. The number of DiGAs is still very limited, partly because of the massive cost of the clinical trials required for permanent approval. Clearer standards could bring down costs, increase transparency, and improve the quality of assessments across the board. But all that requires an agile regulator that continually refines and publishes the rules it applies to judge these studies.

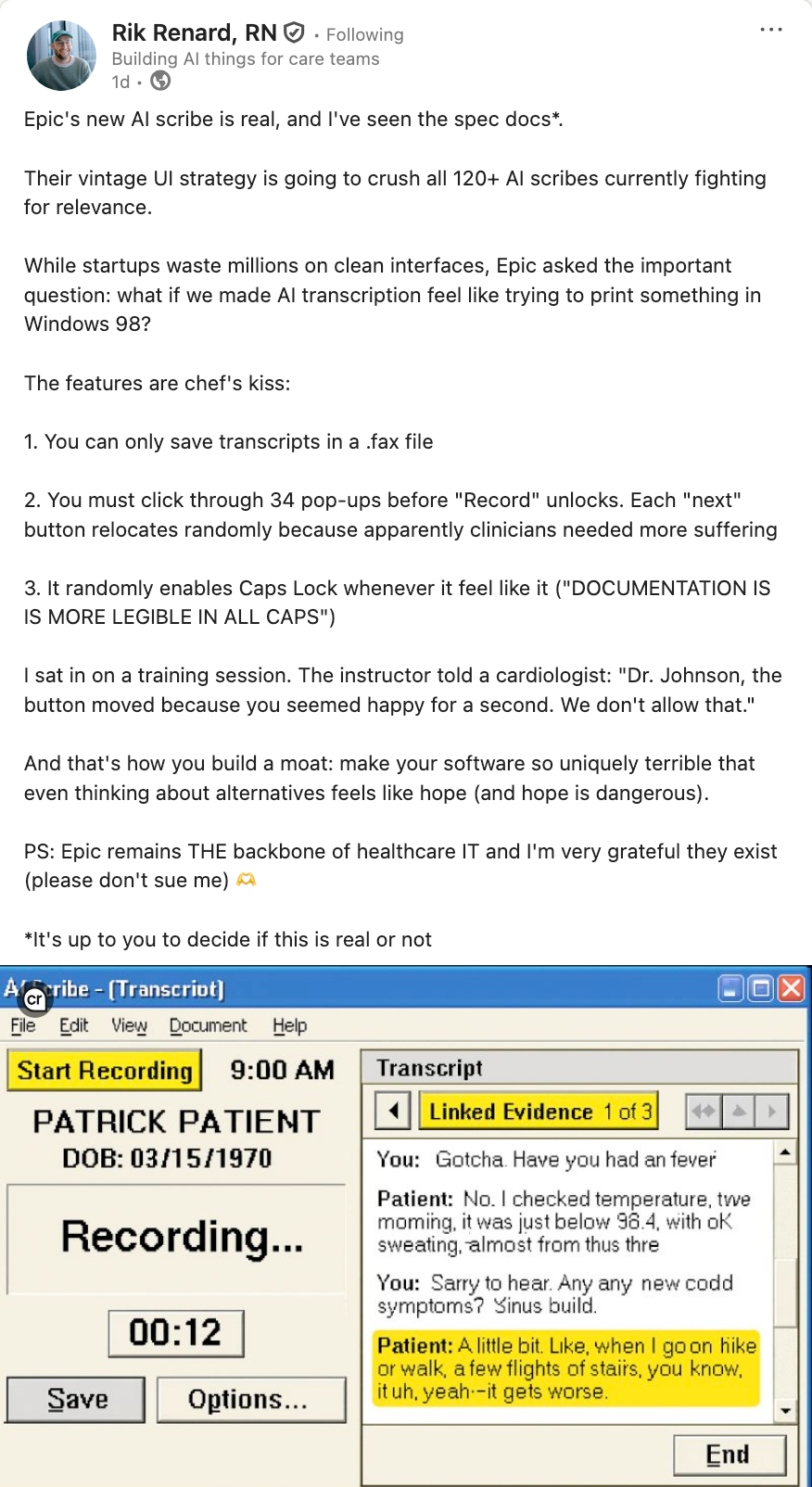

Alright, I will leave you with this highly authentic announcement:

Have a great week!

Best

Friederich

P.S. Fancy a chat? Put yourself in my calendar here.